Katana: A next-generation crawling and spidering framework

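GitHub: https://github.com/projectdiscovery/katana

Features

- Fast and fully configurable web crawling

- Standard and headless modes

- Active and passive modes

- JavaScript parsing / crawling

- Customizable automatic form filling

- Scope control - preconfigured fields / regex

- Customizable output - preconfigured fields

- Input - STDIN, URL, and LIST

- Output - STDOUT, FILE, and JSON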

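Installation

katana requires Go 1.18 to install successfully. To install, just run the following command or download a precompiled binary from the releases page.

go install github.com/projectdiscovery/katana/cmd/katana@latest

More options to install/run katana

Docker

To install/update the Docker image to the latest tag -

docker pull projectdiscovery/katana:latest

To run katana in standard mode using Docker -

docker run projectdiscovery/katana:latest -u https://tesla.com

To run katana in headless mode using Docker -

docker run projectdiscovery/katana:latest -u https://tesla.com -system-chrome -headless

Ubuntu

It is recommended to install the following prerequisites -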

sudo apt update

sudo snap refresh

sudo apt install zip curl wget git

sudo snap install golang --classic

wget -q -O - https://dl-ssl.google.com/linux/linux_signing_key.pub | sudo apt-key add -

sudo sh -c 'echo "deb http://dl.google.com/linux/chrome/deb/ stable main" >> /etc/apt/sources.list.d/google.list'

sudo apt update

sudo apt install google-chrome-stable

Install katana -

go install github.com/projectdiscovery/katana/cmd/katana@latest

Usage

katana -h

This will display help for the tool. Here are all the switches it supports.

Katana is a fast crawler focused on execution in automation

pipelines offering both headless and non-headless crawling.

Usage:

./katana [flags]

Flags:

INPUT:

-u, -list string[] target url / list to crawl

-resume string resume scan using resume.cfg

-e, -exclude string[] exclude host matching specified filter ('cdn', 'private-ips', cidr, ip, regex)

CONFIGURATION:

-r, -resolvers string[] list of custom resolver (file or comma separated)

-d, -depth int maximum depth to crawl (default 3)

-jc, -js-crawl enable endpoint parsing / crawling in javascript file

-jsl, -jsluice enable jsluice parsing in javascript file (memory intensive)

-ct, -crawl-duration value maximum duration to crawl the target for (s, m, h, d) (default s)

-kf, -known-files string enable crawling of known files (all,robotstxt,sitemapxml), a minimum depth of 3 is required to ensure all known files are properly crawled.

-mrs, -max-response-size int maximum response size to read (default 9223372036854775807)

-timeout int time to wait for request in seconds (default 10)

-aff, -automatic-form-fill enable automatic form filling (experimental)

-fx, -form-extraction extract form, input, textarea & select elements in jsonl output

-retry int number of times to retry the request (default 1)

-proxy string http/socks5 proxy to use

-H, -headers string[] custom header/cookie to include in all http request in header:value format (file)

-config string path to the katana configuration file

-fc, -form-config string path to custom form configuration file

-flc, -field-config string path to custom field configuration file

-s, -strategy string Visit strategy (depth-first, breadth-first) (default "depth-first")

-iqp, -ignore-query-params Ignore crawling same path with different query-param values

-tlsi, -tls-impersonate enable experimental client hello (ja3) tls randomization

-dr, -disable-redirects disable following redirects (default false)

DEBUG:

-health-check, -hc run diagnostic check up

-elog, -error-log string file to write sent requests error log

HEADLESS:

-hl, -headless enable headless hybrid crawling (experimental)

-sc, -system-chrome use local installed chrome browser instead of katana installed

-sb, -show-browser show the browser on the screen with headless mode

-ho, -headless-options string[] start headless chrome with additional options

-nos, -no-sandbox start headless chrome in --no-sandbox mode

-cdd, -chrome-data-dir string path to store chrome browser data

-scp, -system-chrome-path string use specified chrome browser for headless crawling

-noi, -no-incognito start headless chrome without incognito mode

-cwu, -chrome-ws-url string use chrome browser instance launched elsewhere with the debugger listening at this URL

-xhr, -xhr-extraction extract xhr request url,method in jsonl output

PASSIVE:

-ps, -passive enable passive sources to discover target endpoints

-pss, -passive-source string[] passive source to use for url discovery (waybackarchive,commoncrawl,alienvault)

SCOPE:

-cs, -crawl-scope string[] in scope url regex to be followed by crawler

-cos, -crawl-out-scope string[] out of scope url regex to be excluded by crawler

-fs, -field-scope string pre-defined scope field (dn,rdn,fqdn) or custom regex (e.g., '(company-staging.io|company.com)') (default "rdn")

-ns, -no-scope disables host based default scope

-do, -display-out-scope display external endpoint from scoped crawling

FILTER:

-mr, -match-regex string[] regex or list of regex to match on output url (cli, file)

-fr, -filter-regex string[] regex or list of regex to filter on output url (cli, file)

-f, -field string field to display in output (url,path,fqdn,rdn,rurl,qurl,qpath,file,ufile,key,value,kv,dir,udir)

-sf, -store-field string field to store in per-host output (url,path,fqdn,rdn,rurl,qurl,qpath,file,ufile,key,value,kv,dir,udir)

-em, -extension-match string[] match output for given extension (eg, -em php,html,js)

-ef, -extension-filter string[] filter output for given extension (eg, -ef png,css)

-mdc, -match-condition string match response with dsl based condition

-fdc, -filter-condition string filter response with dsl based condition

RATE-LIMIT:

-c, -concurrency int number of concurrent fetchers to use (default 10)

-p, -parallelism int number of concurrent inputs to process (default 10)

-rd, -delay int request delay between each request in seconds

-rl, -rate-limit int maximum requests to send per second (default 150)

-rlm, -rate-limit-minute int maximum number of requests to send per minute

UPDATE:

-up, -update update katana to latest version

-duc, -disable-update-check disable automatic katana update check

OUTPUT:

-o, -output string file to write output to

-sr, -store-response store http requests/responses

-srd, -store-response-dir string store http requests/responses to custom directory

-or, -omit-raw omit raw requests/responses from jsonl output

-ob, -omit-body omit response body from jsonl output

-j, -jsonl write output in jsonl format

-nc, -no-color disable output content coloring (ANSI escape codes)

-silent display output only

-v, -verbose display verbose output

-debug display debug output

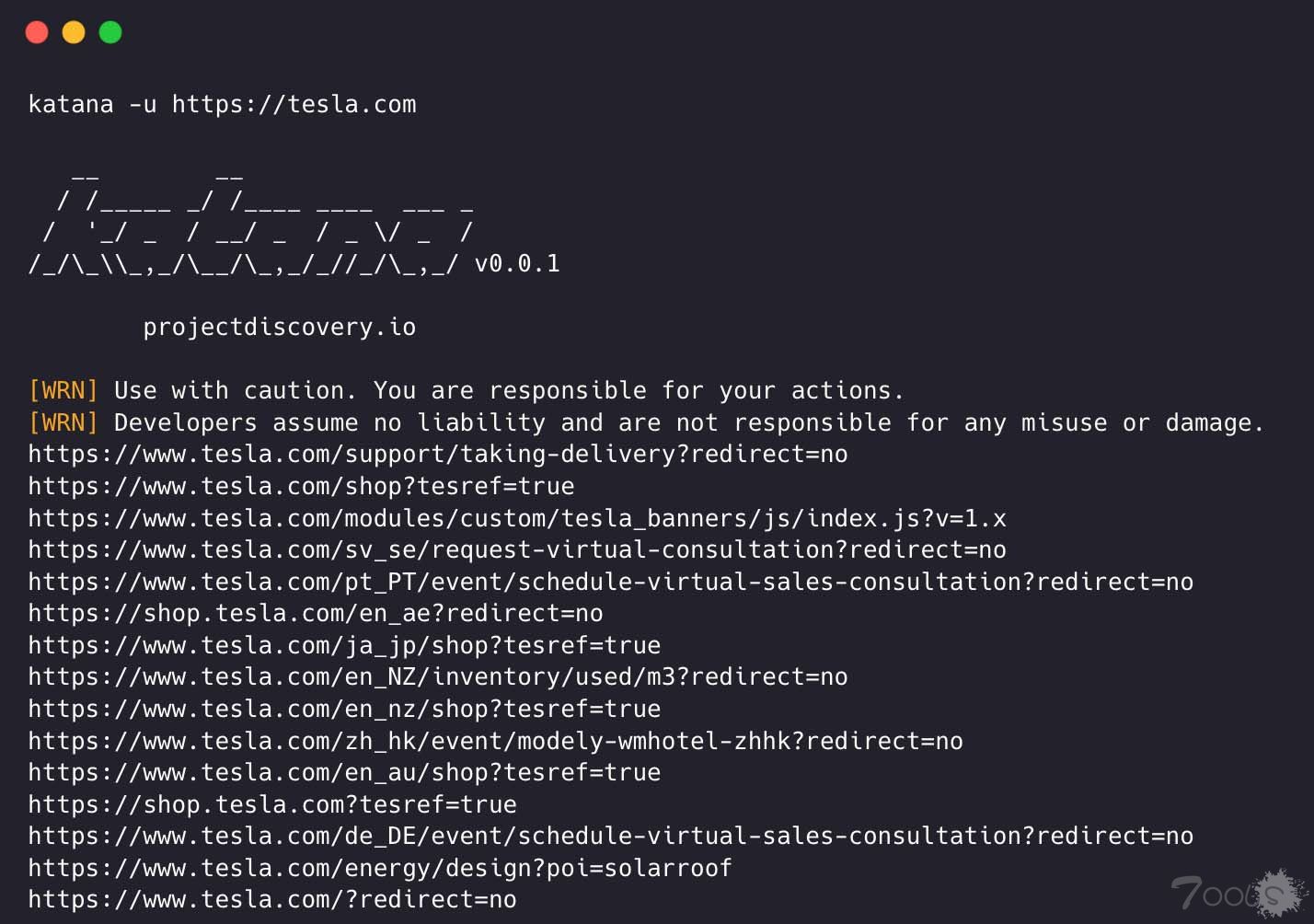

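-version display project version

Running Katana

Input for katana

katana requires a URL or endpoint to crawl and accepts single or multiple inputs.

An input URL can be provided using the -u option, and multiple values can be provided as comma-separated input. Similarly, file input is supported using the -list option, and piped input (stdin) is supported as well.

URL input

katana -u https://tesla.com

Multiple URL input (comma-separated)

katana -u https://tesla.com,https://google.com

List input

$ cat url_list.txt

https://tesla.com

https://google.com

katana -list url_list.txt

STDIN (piped) input

echo https://tesla.com | katana

cat domains | httpx | katana

Example of running katana -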

katana -u https://youtube.com

__ __

/ /_____ _/ /____ ____ ___ _

/ '_/ _ / __/ _ / _ \/ _ /

/_/\_\\_,_/\__/\_,_/_//_/\_,_/ v0.0.1

projectdiscovery.io

[WRN] Use with caution. You are responsible for your actions.

[WRN] Developers assume no liability and are not responsible for any misuse or damage.

https://www.youtube.com/

https://www.youtube.com/about/

https://www.youtube.com/about/press/

https://www.youtube.com/about/copyright/

https://www.youtube.com/t/contact_us/

https://www.youtube.com/creators/

https://www.youtube.com/ads/

https://www.youtube.com/t/terms

https://www.youtube.com/t/privacy

https://www.youtube.com/about/policies/

https://www.youtube.com/howyoutubeworks?utm_campaign=ytgen&utm_source=ythp&utm_medium=LeftNav&utm_content=txt&u=https%3A%2F%2Fwww.youtube.com%2Fhowyoutubeworks%3Futm_source%3Dythp%26utm_medium%3DLeftNav%26utm_campaign%3Dytgen

https://www.youtube.com/new

https://m.youtube.com/

https://www.youtube.com/s/desktop/4965577f/jsbin/desktop_polymer.vflset/desktop_polymer.js

https://www.youtube.com/s/desktop/4965577f/cssbin/www-main-desktop-home-page-skeleton.css

https://www.youtube.com/s/desktop/4965577f/cssbin/www-onepick.css

https://www.youtube.com/s/_/ytmainappweb/_/ss/k=ytmainappweb.kevlar_base.0Zo5FUcPkCg.L.B1.O/am=gAE/d=0/rs=AGKMywG5nh5Qp-BGPbOaI1evhF5BVGRZGA

https://www.youtube.com/opensearch?locale=en_GB

https://www.youtube.com/manifest.webmanifest

https://www.youtube.com/s/desktop/4965577f/cssbin/www-main-desktop-watch-page-skeleton.css

https://www.youtube.com/s/desktop/4965577f/jsbin/web-animations-next-lite.min.vflset/web-animations-next-lite.min.js

https://www.youtube.com/s/desktop/4965577f/jsbin/custom-elements-es5-adapter.vflset/custom-elements-es5-adapter.js

https://www.youtube.com/s/desktop/4965577f/jsbin/webcomponents-sd.vflset/webcomponents-sd.js

https://www.youtube.com/s/desktop/4965577f/jsbin/intersection-observer.min.vflset/intersection-observer.min.js

https://www.youtube.com/s/desktop/4965577f/jsbin/scheduler.vflset/scheduler.js

https://www.youtube.com/s/desktop/4965577f/jsbin/www-i18n-constants-en_GB.vflset/www-i18n-constants.js

https://www.youtube.com/s/desktop/4965577f/jsbin/www-tampering.vflset/www-tampering.js

https://www.youtube.com/s/desktop/4965577f/jsbin/spf.vflset/spf.js

https://www.youtube.com/s/desktop/4965577f/jsbin/network.vflset/network.js

https://www.youtube.com/howyoutubeworks/

https://www.youtube.com/trends/

https://www.youtube.com/jobs/

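https://www.youtube.com/kids/

Crawling mode

Standard mode

Standard crawling mode uses the standard Go http library under the hood to handle HTTP requests/responses. This mode is much faster because it has no browser overhead. However, it analyzes the HTTP response body as is, without any JavaScript or DOM rendering, so it can miss endpoints that only appear after DOM rendering, as well as asynchronous endpoint calls that happen in complex web applications, for example calls that depend on specific browser events.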
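To make the limitation of standard mode concrete, here is a minimal Go sketch that pulls links out of a raw response body without rendering anything. The page content, the `extractStaticLinks` helper, and the regex-based extraction are assumptions made for illustration; this is not how katana itself parses responses.

```go
package main

import (
	"fmt"
	"regexp"
)

// hrefPattern matches href="..." attributes in raw HTML. A regex keeps the
// sketch dependency-free; a real crawler would use a proper HTML parser.
var hrefPattern = regexp.MustCompile(`href="([^"]+)"`)

// extractStaticLinks returns the href values present in the raw body.
// Nothing is rendered, so links created by scripts are never seen.
func extractStaticLinks(body string) []string {
	var links []string
	for _, m := range hrefPattern.FindAllStringSubmatch(body, -1) {
		links = append(links, m[1])
	}
	return links
}

func main() {
	// Hypothetical page: one link is in the markup, one is only added by a script.
	body := `<a href="/about">About</a>
<script>
  var a = document.createElement("a");
  a.setAttribute("href", "/api/" + "orders");
  document.body.appendChild(a);
</script>`
	fmt.Println(extractStaticLinks(body))
}
```

Only /about is reported here; the /api/orders link exists only after the script runs, which is the kind of endpoint a browser-rendered crawl can pick up.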

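Headless mode

Headless mode hooks internal headless calls to handle HTTP requests/responses directly within the browser context. This offers two advantages:

- The HTTP fingerprint (TLS and user agent) fully identifies the client as a legitimate browser

- Better coverage, since endpoints are discovered by analyzing both the standard raw response, as in the previous mode, and the browser-rendered response with JavaScript enabled.

Headless crawling is optional and can be enabled using the -headless option.

Here are the other headless CLI options -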

katana -h headless

Flags:

HEADLESS:

-hl, -headless enable headless hybrid crawling (experimental)

-sc, -system-chrome use local installed chrome browser instead of katana installed

-sb, -show-browser show the browser on the screen with headless mode

-ho, -headless-options string[] start headless chrome with additional options

-nos, -no-sandbox start headless chrome in --no-sandbox mode

-cdd, -chrome-data-dir string path to store chrome browser data

-scp, -system-chrome-path string use specified chrome browser for headless crawling

-noi, -no-incognito start headless chrome without incognito mode

-cwu, -chrome-ws-url string use chrome browser instance launched elsewhere with the debugger listening at this URL

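-xhr, -xhr-extraction extract xhr requests

Runs headless Chrome with the no-sandbox option, useful when running as the root user.

katana -u https://tesla.com -headless -no-sandbox

Runs headless Chrome without incognito mode, useful when using the local browser.

katana -u https://tesla.com -headless -no-incognito

When crawling in headless mode, additional Chrome options can be specified using -headless-options, for example -

katana -u https://tesla.com -headless -system-chrome -headless-options --disable-gpu,proxy-server=http://127.0.0.1:8080

Scope control

Crawling can be endless if not scoped, so katana comes with multiple options to define the crawl scope.

The handiest option is to define the scope with a predefined field name; rdn is the default option for field scope.

- rdn - crawling scoped to the root domain name and all subdomains (e.g. *example.com) (default)

- fqdn - crawling scoped to the given sub(domain) (e.g. www.example.com or api.example.com)

- dn - crawling scoped to the domain name keyword (e.g. example)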
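The three field scopes above can be pictured with a small Go sketch. The `inScope` and `rootDomain` helpers are hypothetical, and the root domain is naively taken as the last two labels of the host (an assumption that breaks for suffixes such as co.uk); katana's actual scope logic may differ.

```go
package main

import (
	"fmt"
	"strings"
)

// rootDomain naively takes the last two labels of a hostname.
// Assumption: multi-label public suffixes such as co.uk are ignored.
func rootDomain(host string) string {
	labels := strings.Split(host, ".")
	if len(labels) < 2 {
		return host
	}
	return strings.Join(labels[len(labels)-2:], ".")
}

// inScope reports whether candidate falls inside the scope derived from seed.
func inScope(field, seed, candidate string) bool {
	root := rootDomain(seed)
	switch field {
	case "rdn": // root domain and all of its subdomains
		return candidate == root || strings.HasSuffix(candidate, "."+root)
	case "fqdn": // only the exact host that was given
		return candidate == seed
	case "dn": // any host containing the domain name keyword
		keyword := strings.Split(root, ".")[0]
		return strings.Contains(candidate, keyword)
	}
	return false
}

func main() {
	seed := "www.example.com"
	hosts := []string{"api.example.com", "www.example.com", "example.org", "other.net"}
	for _, host := range hosts {
		fmt.Printf("%-16s rdn=%-5v fqdn=%-5v dn=%v\n", host,
			inScope("rdn", seed, host), inScope("fqdn", seed, host), inScope("dn", seed, host))
	}
}
```

With www.example.com as the seed, api.example.com is in scope for rdn but not for fqdn, while example.org is only matched by the keyword-based dn scope.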

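katana -u https://tesla.com -fs dn

For advanced scope control, the -cs option can be used, which supports regex.

katana -u https://tesla.com -cs login

For multiple in-scope rules, a file with one string/regex per line can be passed as input.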

$ cat in_scope.txt

login/

admin/

app/

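wordpress/

katana -u https://tesla.com -cs in_scope.txt

To define what not to crawl, the -cos option can be used; it also supports regex input.

katana -u https://tesla.com -cos logout

For multiple out-of-scope rules, a file with one string/regex per line can be passed as input.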

$ cat out_of_scope.txt

/logout

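/log_out

katana -u https://tesla.com -cos out_of_scope.txt

katana's default scope is *.domain. To disable it, the -ns option can be used, which also makes it possible to crawl the internet.

katana -u https://tesla.com -ns

By default, when a scope option is used, it also applies to the links displayed as output, so external URLs are excluded by default. To override this behavior, the -do option can be used to display all external URLs found in the in-scope URLs/endpoints.

katana -u https://tesla.com -do

Here are all the CLI options for scope control -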

katana -h scope

Flags:

SCOPE:

-cs, -crawl-scope string[] in scope url regex to be followed by crawler

-cos, -crawl-out-scope string[] out of scope url regex to be excluded by crawler

-fs, -field-scope string pre-defined scope field (dn,rdn,fqdn) (default "rdn")

-ns, -no-scope disables host based default scope

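-do, -display-out-scope display external endpoint from scoped crawling

Crawler configuration

katana comes with multiple options to configure and control the crawl as needed.

Option to define the depth to which URLs are followed when crawling (-d, -depth). The greater the depth, the more endpoints are crawled and the longer the crawl takes.

katana -u https://tesla.com -d 5

Option to enable JavaScript file parsing and crawling of the endpoints discovered in JavaScript files; disabled by default.

katana -u https://tesla.com -jc

Option to predefine the crawl duration; disabled by default.

katana -u https://tesla.com -ct 2

Option to enable crawling of robots.txt and sitemap.xml files; disabled by default.

katana -u https://tesla.com -kf robotstxt,sitemapxml

Option to enable automatic form filling for known/unknown fields. Known field values can be customized as needed by updating the form configuration file at $HOME/.config/katana/form-config.yaml.

Automatic form filling is an experimental feature.

katana -u https://tesla.com -aff

Authenticated crawling

Authenticated crawling involves including custom headers or cookies in HTTP requests to access protected resources. These headers provide authentication or authorization information, allowing you to crawl authenticated content/endpoints. You can specify headers directly on the command line or provide them to katana as a file.

Note: users need to perform the authentication manually and export the session cookie/header to a file for katana to use.

Option to add a custom header or cookie to requests.

Here is an example of adding a cookie to the request:

katana -u https://tesla.com -H 'Cookie: usrsess=AmljNrESo'

Headers or cookies can also be provided as a file. For example: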

$ cat cookie.txt

Cookie: PHPSESSIONID=XXXXXXXXX

X-API-KEY: XXXXX

TOKEN=XX

katana -u https://tesla.com -H cookie.txt

More options can be configured when needed. Here are all the configuration-related CLI options -

katana -h config

Flags:

CONFIGURATION:

-r, -resolvers string[] list of custom resolver (file or comma separated)

-d, -depth int maximum depth to crawl (default 3)

-jc, -js-crawl enable endpoint parsing / crawling in javascript file

-ct, -crawl-duration int maximum duration to crawl the target for

-kf, -known-files string enable crawling of known files (all,robotstxt,sitemapxml)

-mrs, -max-response-size int maximum response size to read (default 9223372036854775807)

-timeout int time to wait for request in seconds (default 10)

-aff, -automatic-form-fill enable automatic form filling (experimental)

-fx, -form-extraction enable extraction of form, input, textarea & select elements

-retry int number of times to retry the request (default 1)

-proxy string http/socks5 proxy to use

-H, -headers string[] custom header/cookie to include in request

-config string path to the katana configuration file

-fc, -form-config string path to custom form configuration file

-flc, -field-config string path to custom field configuration file

-s, -strategy string Visit strategy (depth-first, breadth-first) (default "depth-first")

Connecting to an active browser session

katana can also connect to an active browser session where the user is already logged in and authenticated, and use it for crawling. The only requirement is to start the browser with remote debugging enabled.

Here is an example of starting Chrome with remote debugging enabled and using it with katana -

Step 1) First, locate the path of the Chrome executable

| Operating system | Chromium executable location | Google Chrome executable location |
|---|---|---|
| Windows (64-bit) | C:\Program Files (x86)\Google\Chromium\Application\chrome.exe | C:\Program Files (x86)\Google\Chrome\Application\chrome.exe |
| Windows (32-bit) | C:\Program Files\Google\Chromium\Application\chrome.exe | C:\Program Files\Google\Chrome\Application\chrome.exe |
| macOS | /Applications/Chromium.app/Contents/MacOS/Chromium | /Applications/Google Chrome.app/Contents/MacOS/Google Chrome |
| Linux | /usr/bin/chromium | /usr/bin/google-chrome |

Step 2) Start Chrome with remote debugging enabled; it will print the websocket URL. For example, on macOS you can start Chrome with remote debugging enabled using the following command -

$ /Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome --remote-debugging-port=9222

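DevTools listening on ws://127.0.0.1:9222/devtools/browser/c5316c9c-19d6-42dc-847a-41d1aeebf7d6

Now log in to the website you want to crawl and keep the browser open.

Step 3) Now use the websocket URL with katana to connect to the active browser session and crawl the website

katana -headless -u https://tesla.com -cwu ws://127.0.0.1:9222/devtools/browser/c5316c9c-19d6-42dc-847a-41d1aeebf7d6 -no-incognito

Note: the -cdd option can be used to specify a custom Chrome data directory to store browser data and cookies, but session data will not be saved if the cookie is set to Session only or expires after a certain time.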
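If the websocket URL needs to be picked up by a script rather than copied from the terminal, a sketch along the following lines may help. It assumes that Chrome, when started with --remote-debugging-port=9222, also serves a JSON document at http://127.0.0.1:9222/json/version containing a webSocketDebuggerUrl key; the `debuggerURL` helper and the sample payload are illustrative only.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// versionInfo holds the one field of interest from the JSON document that
// Chrome is assumed to serve at /json/version on the debugging port.
type versionInfo struct {
	WebSocketDebuggerURL string `json:"webSocketDebuggerUrl"`
}

// debuggerURL extracts the websocket URL from a /json/version payload.
func debuggerURL(payload []byte) (string, error) {
	var info versionInfo
	if err := json.Unmarshal(payload, &info); err != nil {
		return "", err
	}
	if info.WebSocketDebuggerURL == "" {
		return "", errors.New("no webSocketDebuggerUrl in payload")
	}
	return info.WebSocketDebuggerURL, nil
}

func main() {
	// Sample payload; in practice it would be fetched from the debugging port.
	sample := []byte(`{"Browser":"Chrome","webSocketDebuggerUrl":"ws://127.0.0.1:9222/devtools/browser/c5316c9c-19d6-42dc-847a-41d1aeebf7d6"}`)
	ws, err := debuggerURL(sample)
	if err != nil {
		fmt.Println("could not find websocket URL:", err)
		return
	}
	// Print the katana command from step 3 with the discovered URL filled in.
	fmt.Println("katana -headless -u https://tesla.com -cwu", ws, "-no-incognito")
}
```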

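Filters

katana comes with built-in fields that can be used to filter the output for the desired information; the -f option can be used to specify any of the available fields.

-f, -field string field to display in output (url,path,fqdn,rdn,rurl,qurl,qpath,file,key,value,kv,dir,udir)

Here is a table with an example of each field and the expected output when used -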

| FIELD | DESCRIPTION | EXAMPLE |
|---|---|---|
| url | URL Endpoint | https://admin.projectdiscovery.io/admin/login?user=admin&password=admin |
| qurl | URL including query param | https://admin.projectdiscovery.io/admin/login.php?user=admin&password=admin |
| qpath | Path including query param | /login?user=admin&password=admin |
| path | URL Path | https://admin.projectdiscovery.io/admin/login |
| fqdn | Fully Qualified Domain name | admin.projectdiscovery.io |
| rdn | Root Domain name | projectdiscovery.io |
| rurl | Root URL | https://admin.projectdiscovery.io |
| ufile | URL with File | https://admin.projectdiscovery.io/login.js |
| file | Filename in URL | login.php |
| key | Parameter keys in URL | user,password |
| value | Parameter values in URL | admin,admin |
| kv | Keys=Values in URL | user=admin&password=admin |
| dir | URL Directory name | /admin/ |
| udir | URL with Directory | https://admin.projectdiscovery.io/admin/ |
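To show how several of the fields in the table relate to one URL, here is a rough Go sketch built on the standard net/url and path packages. The `deriveFields` helper is hypothetical and only approximates the documented examples; katana's own field extraction may differ in edge cases.

```go
package main

import (
	"fmt"
	"net/url"
	"path"
	"strings"
)

// deriveFields splits a URL into a few of the field names from the table.
// Assumption: this only approximates the documented examples.
func deriveFields(raw string) (map[string]string, error) {
	u, err := url.Parse(raw)
	if err != nil {
		return nil, err
	}
	p := u.Path
	if p == "" {
		p = "/"
	}
	dir := path.Dir(p)
	if !strings.HasSuffix(dir, "/") {
		dir += "/"
	}
	root := u.Scheme + "://" + u.Host
	fields := map[string]string{
		"url":  raw,
		"fqdn": u.Hostname(),
		"rurl": root,
		"dir":  dir,
		"udir": root + dir,
		"kv":   u.RawQuery,
	}
	// Treat the last path segment as a file only when it has an extension.
	if base := path.Base(p); path.Ext(base) != "" {
		fields["file"] = base
	}
	// Walk the raw query so keys and values keep their original order.
	var keys, values []string
	if u.RawQuery != "" {
		for _, pair := range strings.Split(u.RawQuery, "&") {
			kv := strings.SplitN(pair, "=", 2)
			keys = append(keys, kv[0])
			if len(kv) == 2 {
				values = append(values, kv[1])
			}
		}
	}
	fields["key"] = strings.Join(keys, ",")
	fields["value"] = strings.Join(values, ",")
	return fields, nil
}

func main() {
	fields, err := deriveFields("https://admin.projectdiscovery.io/admin/login.php?user=admin&password=admin")
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for _, name := range []string{"url", "fqdn", "rurl", "dir", "udir", "file", "key", "value", "kv"} {
		fmt.Printf("%-5s %s\n", name, fields[name])
	}
}
```

The rdn field is left out on purpose, since finding the root domain reliably needs a public suffix list.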

Here is an example of using the field option to display only the URLs that contain query parameters -

katana -u https://tesla.com -f qurl -silent

https://shop.tesla.com/en_au?redirect=no

https://shop.tesla.com/en_nz?redirect=no

https://shop.tesla.com/product/men_s-raven-lightweight-zip-up-bomber-jacket?sku=1740250-00-A

https://shop.tesla.com/product/tesla-shop-gift-card?sku=1767247-00-A

https://shop.tesla.com/product/men_s-chill-crew-neck-sweatshirt?sku=1740176-00-A

https://www.tesla.com/about?redirect=no

https://www.tesla.com/about/legal?redirect=no

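https://www.tesla.com/findus/list?redirect=no

Custom fields

You can create custom fields using regex rules to extract and store specific information from page responses. These custom fields are defined in a YAML config file and are loaded from the default location at $HOME/.config/katana/field-config.yaml. Alternatively, you can use the -flc option to load a custom field config file from a different location. Here is an example custom field.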

- name: email
  type: regex
  regex:
  - '([a-zA-Z0-9._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)'
  - '([a-zA-Z0-9+._-]+@[a-zA-Z0-9._-]+\.[a-zA-Z0-9_-]+)'

- name: phone
  type: regex
  regex:
  - '\d{3}-\d{8}|\d{4}-\d{7}'

When defining custom fields, the following attributes are supported:

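- name (required)

The value of the name attribute is used as the -field CLI option value.

- type (required)

The type of the custom attribute; the currently supported option is regex.

- part (optional)

The part of the response to extract the information from. The default value is response, which includes both the header and the body. Other possible values are header and body.

- group (optional)

You can use this attribute to select a specific matching group in the regex, for example:

group: 1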

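Running katana with custom fields:

katana -u https://tesla.com -f email,phone

To complement the field option, which is useful for filtering output at run time, there is the -sf, -store-field option. It works exactly like the field option, except that instead of filtering, it stores all the information on disk under the katana_field directory, sorted by target URL.

katana -u https://tesla.com -sf key,fqdn,qurl -silent

$ ls katana_field/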

https_www.tesla.com_fqdn.txt

https_www.tesla.com_key.txt

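https_www.tesla.com_qurl.txt

The -store-field option can be useful for collecting information to build a targeted wordlist for various purposes, including but not limited to:

- Identifying the most commonly used parameters

- Discovering frequently used paths

- Finding commonly used files

- Identifying related or unknown subdomains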
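As one example of the wordlist use cases listed above, the following Go sketch counts how often each query parameter name appears across a set of crawled URLs. The `countParamKeys` helper is hypothetical, and the input is a short hard-coded list taken from the sample qurl output rather than a file read from katana_field.

```go
package main

import (
	"fmt"
	"net/url"
	"sort"
)

// countParamKeys counts in how many URLs each query parameter name appears.
func countParamKeys(urls []string) map[string]int {
	counts := make(map[string]int)
	for _, raw := range urls {
		u, err := url.Parse(raw)
		if err != nil {
			continue // skip lines that are not valid URLs
		}
		for key := range u.Query() {
			counts[key]++
		}
	}
	return counts
}

func main() {
	urls := []string{
		"https://shop.tesla.com/en_au?redirect=no",
		"https://shop.tesla.com/product/tesla-shop-gift-card?sku=1767247-00-A",
		"https://www.tesla.com/about?redirect=no",
	}
	counts := countParamKeys(urls)

	// Sort by frequency (descending), then by name for stable output.
	keys := make([]string, 0, len(counts))
	for k := range counts {
		keys = append(keys, k)
	}
	sort.Slice(keys, func(i, j int) bool {
		if counts[keys[i]] != counts[keys[j]] {
			return counts[keys[i]] > counts[keys[j]]
		}
		return keys[i] < keys[j]
	})
	for _, k := range keys {
		fmt.Printf("%s %d\n", k, counts[k])
	}
}
```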

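Katana filters

Crawl output can easily be matched for specific extensions using the -em option, which ensures that only output containing the given extensions is displayed.

katana -u https://tesla.com -silent -em js,jsp,json

Crawl output can easily be filtered for specific extensions using the -ef option, which ensures that all URLs containing the given extensions are removed.

katana -u https://tesla.com -silent -ef css,txt,md

The -match-regex or -mr flag lets you filter output URLs using regular expressions. When this flag is used, only URLs that match the specified regular expression are printed in the output.

katana -u https://tesla.com -mr 'https://shop\.tesla\.com/*' -silent

The -filter-regex or -fr flag lets you filter output URLs using regular expressions. When this flag is used, URLs that match the specified regular expression are skipped.

katana -u https://tesla.com -fr 'https://www\.tesla\.com/*' -silent

Advanced filtering

katana supports DSL-based expressions for advanced matching and filtering:

- To match endpoints with a 200 status code:

katana -u https://www.hackerone.com -mdc 'status_code == 200'

- To match endpoints that contain "default" and have a status code other than 403:

katana -u https://www.hackerone.com -mdc 'contains(endpoint, "default") && status_code != 403'

- To match endpoints with PHP technologies:

katana -u https://www.hackerone.com -mdc 'contains(to_lower(technologies), "php")'

- To filter out endpoints running on Cloudflare:

katana -u https://www.hackerone.com -fdc 'contains(to_lower(technologies), "cloudflare")'

DSL functions can be applied to any key in the jsonl output.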
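The same kind of condition can also be applied after the fact to saved jsonl output. The Go sketch below is not the DSL engine; it is a plain post-processing equivalent of combining 'status_code == 200' with a Cloudflare filter, assuming the request.endpoint, response.status_code and response.technologies keys shown in the jsonl sample of this post. The `result` type and the `keep` and `hasTechnology` helpers are hypothetical names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// result models only the jsonl keys this sketch needs.
type result struct {
	Request struct {
		Endpoint string `json:"endpoint"`
	} `json:"request"`
	Response struct {
		StatusCode   int      `json:"status_code"`
		Technologies []string `json:"technologies"`
	} `json:"response"`
}

// hasTechnology reports whether any detected technology contains name,
// compared case-insensitively (name must be lower case).
func hasTechnology(r result, name string) bool {
	for _, t := range r.Response.Technologies {
		if strings.Contains(strings.ToLower(t), name) {
			return true
		}
	}
	return false
}

// keep returns true for 200 responses that are not served via Cloudflare.
func keep(line string) (bool, error) {
	var r result
	if err := json.Unmarshal([]byte(line), &r); err != nil {
		return false, err
	}
	return r.Response.StatusCode == 200 && !hasTechnology(r, "cloudflare"), nil
}

func main() {
	// Hypothetical, shortened jsonl lines.
	lines := []string{
		`{"request":{"endpoint":"https://example.com"},"response":{"status_code":200,"technologies":["Docker"]}}`,
		`{"request":{"endpoint":"https://example.com/cdn"},"response":{"status_code":200,"technologies":["Cloudflare"]}}`,
	}
	for _, line := range lines {
		ok, err := keep(line)
		fmt.Println(ok, err)
	}
}
```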

Here are additional filter options -

katana -h filter

Flags:

FILTER:

-mr, -match-regex string[] regex or list of regex to match on output url (cli, file)

-fr, -filter-regex string[] regex or list of regex to filter on output url (cli, file)

-f, -field string field to display in output (url,path,fqdn,rdn,rurl,qurl,qpath,file,ufile,key,value,kv,dir,udir)

-sf, -store-field string field to store in per-host output (url,path,fqdn,rdn,rurl,qurl,qpath,file,ufile,key,value,kv,dir,udir)

-em, -extension-match string[] match output for given extension (eg, -em php,html,js)

-ef, -extension-filter string[] filter output for given extension (eg, -ef png,css)

-mdc, -match-condition string match response with dsl based condition

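-fdc, -filter-condition string filter response with dsl based condition

Rate limit

It is easy to get blocked/banned while crawling if the limits of the target website are not respected. katana comes with multiple options to tune the crawl to go as fast or as slow as needed.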

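Option to introduce a delay, in seconds, between each new request katana makes while crawling; disabled by default.

katana -u https://tesla.com -delay 20

Option to control the number of URLs fetched at the same time per target.

katana -u https://tesla.com -c 20

Option to define the number of targets to process at the same time from list input.

katana -u https://tesla.com -p 20

Option to define the maximum number of requests that can be sent per second.

katana -u https://tesla.com -rl 100

Option to define the maximum number of requests that can be sent per minute.

katana -u https://tesla.com -rlm 500

Here are all the long/short CLI options for rate limit control -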

katana -h rate-limit

Flags:

RATE-LIMIT:

-c, -concurrency int number of concurrent fetchers to use (default 10)

-p, -parallelism int number of concurrent inputs to process (default 10)

-rd, -delay int request delay between each request in seconds

-rl, -rate-limit int maximum requests to send per second (default 150)

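-rlm, -rate-limit-minute int maximum number of requests to send per minute

Output

katana supports file output both in plain text format and in JSON format, which includes additional information such as the source, tag, and attribute name that relate to the discovered endpoint.

By default, katana outputs the crawled endpoints in plain text format. The results can be written to a file using the -output option.

katana -u https://example.com -no-scope -output example_endpoints.txt

katana -u https://example.com -jsonl | jq .

{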

"timestamp": "2023-03-20T16:23:58.027559+05:30",

"request": {

"method": "GET",

"endpoint": "https://example.com",

"raw": "GET / HTTP/1.1\r\nHost: example.com\r\nUser-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 11_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4280.88 Safari/537.36\r\nAccept-Encoding: gzip\r\n\r\n"

},

"response": {

"status_code": 200,

"headers": {

"accept_ranges": "bytes",

"expires": "Mon, 27 Mar 2023 10:53:58 GMT",

"last_modified": "Thu, 17 Oct 2019 07:18:26 GMT",

"content_type": "text/html; charset=UTF-8",

"server": "ECS (dcb/7EA3)",

"vary": "Accept-Encoding",

"etag": "\"3147526947\"",

"cache_control": "max-age=604800",

"x_cache": "HIT",

"date": "Mon, 20 Mar 2023 10:53:58 GMT",

"age": "331239"

},

"body": "<!doctype html>\n<html>\n<head>\n <title>Example Domain</title>\n\n <meta charset=\"utf-8\" />\n <meta http-equiv=\"Content-type\" content=\"text/html; charset=utf-8\" />\n <meta name=\"viewport\" content=\"width=device-width, initial-scale=1\" />\n <style type=\"text/css\">\n body {\n background-color: #f0f0f2;\n margin: 0;\n padding: 0;\n font-family: -apple-system, system-ui, BlinkMacSystemFont, \"Segoe UI\", \"Open Sans\", \"Helvetica Neue\", Helvetica, Arial, sans-serif;\n \n }\n div {\n width: 600px;\n margin: 5em auto;\n padding: 2em;\n background-color: #fdfdff;\n border-radius: 0.5em;\n box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02);\n }\n a:link, a:visited {\n color: #38488f;\n text-decoration: none;\n }\n @media (max-width: 700px) {\n div {\n margin: 0 auto;\n width: auto;\n }\n }\n </style> \n</head>\n\n<body>\n<div>\n <h1>Example Domain</h1>\n <p>This domain is for use in illustrative examples in documents. You may use this\n domain in literature without prior coordination or asking for permission.</p>\n <p><a href=\"https://www.iana.org/domains/example\">More information...</a></p>\n</div>\n</body>\n</html>\n",

"technologies": [

"Azure",

"Amazon ECS",

"Amazon Web Services",

"Docker",

"Azure CDN"

],

"raw": "HTTP/1.1 200 OK\r\nContent-Length: 1256\r\nAccept-Ranges: bytes\r\nAge: 331239\r\nCache-Control: max-age=604800\r\nContent-Type: text/html; charset=UTF-8\r\nDate: Mon, 20 Mar 2023 10:53:58 GMT\r\nEtag: \"3147526947\"\r\nExpires: Mon, 27 Mar 2023 10:53:58 GMT\r\nLast-Modified: Thu, 17 Oct 2019 07:18:26 GMT\r\nServer: ECS (dcb/7EA3)\r\nVary: Accept-Encoding\r\nX-Cache: HIT\r\n\r\n<!doctype html>\n<html>\n<head>\n <title>Example Domain</title>\n\n <meta charset=\"utf-8\" />\n <meta http-equiv=\"Content-type\" content=\"text/html; charset=utf-8\" />\n <meta name=\"viewport\" content=\"width=device-width, initial-scale=1\" />\n <style type=\"text/css\">\n body {\n background-color: #f0f0f2;\n margin: 0;\n padding: 0;\n font-family: -apple-system, system-ui, BlinkMacSystemFont, \"Segoe UI\", \"Open Sans\", \"Helvetica Neue\", Helvetica, Arial, sans-serif;\n \n }\n div {\n width: 600px;\n margin: 5em auto;\n padding: 2em;\n background-color: #fdfdff;\n border-radius: 0.5em;\n box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02);\n }\n a:link, a:visited {\n color: #38488f;\n text-decoration: none;\n }\n @media (max-width: 700px) {\n div {\n margin: 0 auto;\n width: auto;\n }\n }\n </style> \n</head>\n\n<body>\n<div>\n <h1>Example Domain</h1>\n <p>This domain is for use in illustrative examples in documents. You may use this\n domain in literature without prior coordination or asking for permission.</p>\n <p><a href=\"https://www.iana.org/domains/example\">More information...</a></p>\n</div>\n</body>\n</html>\n"

}

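}

The -store-response option allows writing all crawled endpoint requests and responses to text files. When this option is used, text files including the request and response are written to the katana_response directory. If you would like to specify a custom directory, you can use the -store-response-dir option.

katana -u https://example.com -no-scope -store-response

$ cat katana_response/index.txt

katana_response/example.com/327c3fda87ce286848a574982ddd0b7c7487f816.txt https://example.com (200 OK)

katana_response/www.iana.org/bfc096e6dd93b993ca8918bf4c08fdc707a70723.txt http://www.iana.org/domains/reserved (200 OK)

Note: the -store-response option is not supported in -headless mode.

Here are additional CLI options related to output -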

katana -h output

OUTPUT:

-o, -output string file to write output to

-sr, -store-response store http requests/responses

-srd, -store-response-dir string store http requests/responses to custom directory

-j, -json write output in JSONL(ines) format

-nc, -no-color disable output content coloring (ANSI escape codes)

-silent display output only

-v, -verbose display verbose output

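-version display project version

Katana as a library

katana can be used as a library by creating an instance of the Options struct and populating it with the same options that would be specified via the CLI. Using these options, you can create crawlerOptions and, from them, a standard or hybrid crawler.

The crawler.Crawl method should be called to crawl the input.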

package main

import (
	"math"

	"github.com/projectdiscovery/gologger"
	"github.com/projectdiscovery/katana/pkg/engine/standard"
	"github.com/projectdiscovery/katana/pkg/output"
	"github.com/projectdiscovery/katana/pkg/types"
)

func main() {
	options := &types.Options{
		MaxDepth:     3,             // Maximum depth to crawl
		FieldScope:   "rdn",         // Crawling Scope Field
		BodyReadSize: math.MaxInt,   // Maximum response size to read
		Timeout:      10,            // Timeout is the time to wait for request in seconds
		Concurrency:  10,            // Concurrency is the number of concurrent crawling goroutines
		Parallelism:  10,            // Parallelism is the number of urls processing goroutines
		Delay:        0,             // Delay is the delay between each crawl requests in seconds
		RateLimit:    150,           // Maximum requests to send per second
		Strategy:     "depth-first", // Visit strategy (depth-first, breadth-first)
		OnResult: func(result output.Result) { // Callback function to execute for result
			gologger.Info().Msg(result.Request.URL)
		},
	}
	crawlerOptions, err := types.NewCrawlerOptions(options)
	if err != nil {
		gologger.Fatal().Msg(err.Error())
	}
	defer crawlerOptions.Close()
	crawler, err := standard.New(crawlerOptions)
	if err != nil {
		gologger.Fatal().Msg(err.Error())
	}
	defer crawler.Close()
	var input = "https://www.hackerone.com"
	err = crawler.Crawl(input)
	if err != nil {
		gologger.Warning().Msgf("Could not crawl %s: %s", input, err.Error())
	}
}

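8 comments

Is this any good?

Works well, thanks.

Will try this crawler.

This crawler is quite convenient to configure; currently using it.

How is it in use? Which works better, this or rad?

Will try it; one more tool for the arsenal.

The OP is amazing, a very detailed write-up.

Taking this; will see how well this crawler performs.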